There is a theory which states that if ever anyone discovers exactly what the business world is for, it will instantly disappear and be replaced by something even more bizarre and inexplicable. There is another theory which states that this has already happened. This certainly goes a long way to explaining the current corporate strategy for dealing with Artificial Intelligence, which is to largely ignore it, in the same way that a startled periwinkle might ignore an oncoming bulldozer, hoping that if it doesn’t make any sudden moves the whole “unsettling” situation will simply settle down.

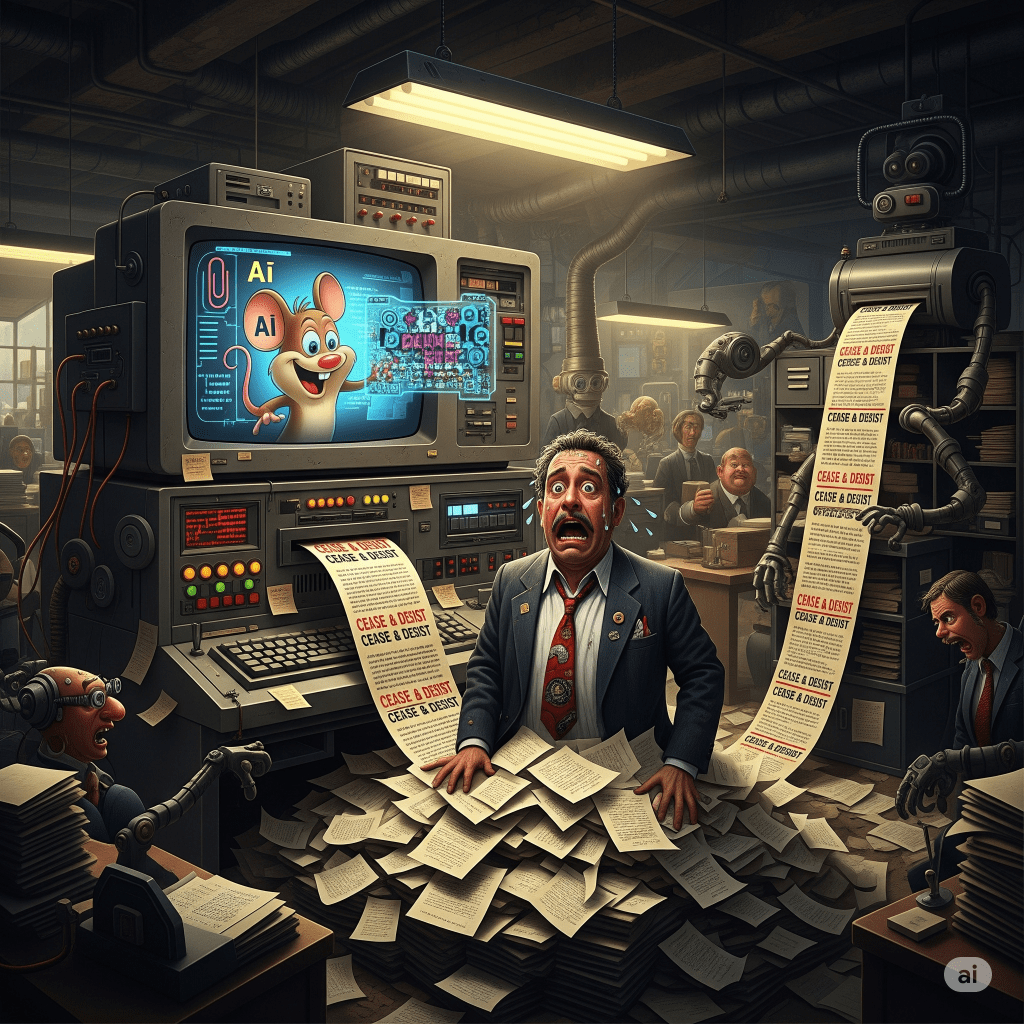

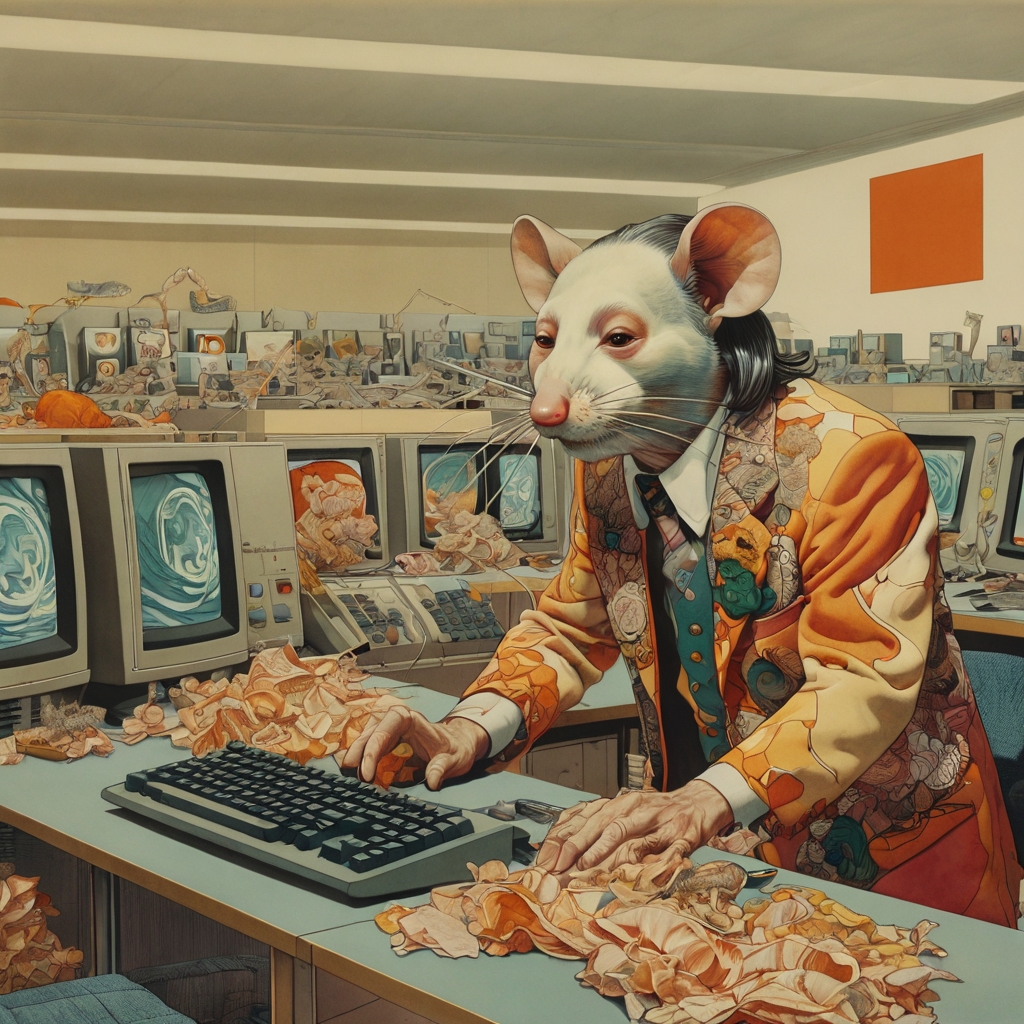

This is, of course, a terrible strategy, because while everyone is busy not looking, the bulldozer is not only getting closer, it’s also learning to draw a surprisingly good, yet legally dubious, cartoon mouse.

We live in an age of what is fashionably called “Agile,” a term which here seems to mean “The Art of Controlled Panic.” It’s a frantic, permanent state of trying to build the aeroplane while it’s already taxiing down the runway, fuelled by lukewarm coffee and a deep-seated fear of the next quarterly review. For years, the panic-release valve was off-shoring. When a project was on fire, you could simply bundle up your barely coherent requirements and fling them over the digital fence to a team in another time zone, hoping they’d throw back a working solution before morning.

Now, we have perfected this model. AI is the new, ultimate off-shoring. The team is infinitely scalable, works for pennies, and is located somewhere so remote it isn’t even on a map. It’s in “The Cloud,” a place that is reassuringly vague and requires no knowledge of geography whatsoever.

The problem is, this new team is a bit weird. You still need that one, increasingly stressed-out human—let’s call them the Prompt Whisperer—to translate the frantic, contradictory demands of the business into a language the machine will understand. They are the new middle manager, bridging the vast, terrifying gap between human chaos and silicon logic. But there’s a new, far more alarming, item in their job description.

You see, the reason this new off-shore team is so knowledgeable is that it has been trained by binge-watching the entire internet. Every film, every book, every brand logo, every cat picture, and every episode of every cartoon ever made. And as the ongoing legal spat pitting Disney and Universal against the AI art platform Midjourney demonstrates, the hangover from this creative binge is about to kick in with the force of a Pan Galactic Gargle Blaster.

The issue, for any small business cheerfully using an AI to design their new logo, is one of copyright. In the US, they have a principle called “fair use,” which is a wonderfully flexible and often confusing set of rules. In the UK, we have “fair dealing,” which is a narrower, more limited set of rules that is, in its own way, just as confusing. If the difference between the two seems unclear, then congratulations, you have understood the central point perfectly: you are almost certainly in trouble.

The AI, you see, doesn’t create. It remixes. And it has no concept of ownership. Ask it to design a logo for your artisanal doughnut shop, and it might cheerfully serve up something that looks uncannily like the beloved mascot of a multi-billion-dollar entertainment conglomerate. The AI isn’t your co-conspirator; it’s the unthinking photocopier, and you’re the one left holding the legally radioactive copy. Your brilliant, cost-effective branding exercise has just become a business-ending legal event.

So, here we are, practising the art of controlled panic on a legal minefield. The new off-shored intelligence is a powerful, dangerous, and creatively promiscuous force. That poor Prompt Whisperer isn’t just briefing the machine anymore; they are its parole officer, desperately trying to stop it from cheerfully plagiarizing its way into oblivion. The only thing that hasn’t “settled down” is the dust from the first wave of cease-and-desist letters. And they are, I assure you, on their way.