The world feels like it’s moving faster every day, a sensation that many of us share. It’s a feeling of both unprecedented progress and growing precariousness. At the heart of this feeling is artificial intelligence, a technology that acts as a mirror to our deepest fears and highest aspirations.

AI does not inspire a single, simple reaction, but rather a spectrum of them. It’s a profound paradox: a tool that could both tear society apart and help build a better one.

The Western View: A Mirror of Our Anxieties

In many Western nations, the conversation around AI is dominated by a sense of caution. This perspective highlights the “scary” side of the technology:

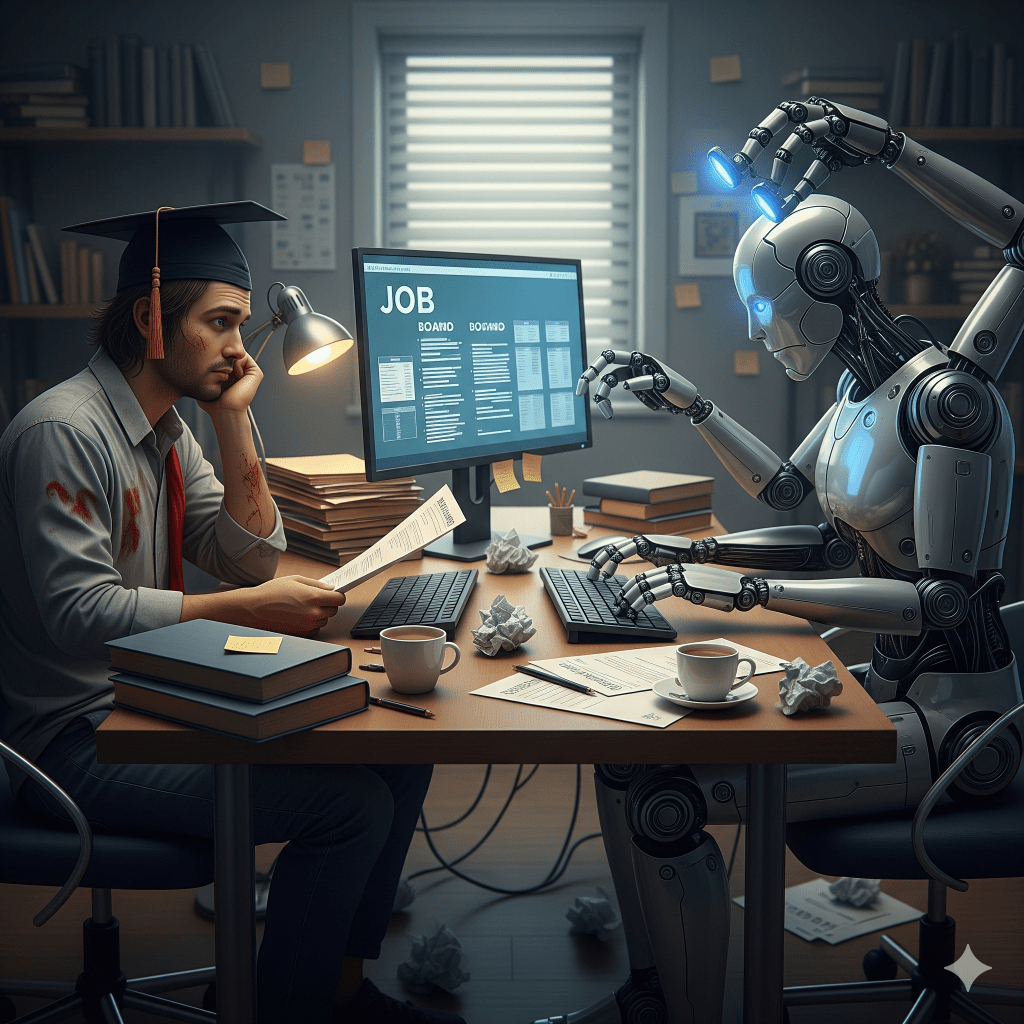

- Job Displacement and Economic Inequality: There’s a widespread fear that AI will automate routine tasks, leading to mass job losses and exacerbating the divide between the tech-savvy elite and those left behind.

- Erosion of Human Connection: As AI companions and chatbots become more advanced, many worry we’ll lose our capacity for genuine human connection. The Pew Research Center, for example, found that far more Americans are pessimistic than optimistic about AI’s effect on people’s ability to form meaningful relationships.

- Misinformation and Manipulation: AI’s ability to create convincing fake content, from deepfakes to disinformation, threatens to erode trust in media and democratic institutions. It’s becoming increasingly difficult to distinguish between what’s real and what’s AI-generated.

- The “Black Box” Problem: Many of the most powerful AI models are so complex that even their creators don’t fully understand how they reach conclusions. This lack of transparency, coupled with the potential for algorithms to be trained on biased data, could lead to discriminatory outcomes in areas like hiring and criminal justice.

Despite these anxieties, a hopeful vision exists. AI could be a powerful tool for good, helping us tackle global crises like climate change and disease, or augmenting human ingenuity to unlock new levels of creativity.

The Rest of the World: Hope as a Catalyst

But this cautious view is not universal. In many emerging economies in Asia, Africa, and Latin America, the perception of AI is far more optimistic. People in countries like India, Kenya, and Brazil often view AI as an opportunity rather than a risk.

This divide is a product of different societal contexts:

- Solving Pressing Problems: For many developing nations, AI is seen as a fast-track solution to long-standing challenges. It’s being used to optimize agriculture, predict disease outbreaks, and expand access to healthcare in remote areas.

- Economic Opportunity: These countries see AI as a way to leapfrog traditional stages of industrial development and become global leaders in the new digital economy, creating jobs and driving innovation.

This optimism also extends to China, a nation with a distinctive, state-led approach to AI. Whereas development in the West is largely market-driven, China treats AI as a national priority to be guided by the government. Public trust in AI there is significantly higher than in the West, largely because the technology is seen as a tool for economic growth and social stability. While Western countries express concern over AI-driven surveillance, many in China see technologies such as facial recognition in urban areas as an enhancement to public security and convenience.

The Dangerous Divide: A World of AI “Haves” and “Have-Nots”

These differing perceptions and adoption rates could lead to a global divide with both positive and negative consequences.

On the positive side, this could foster a diverse ecosystem of AI innovation. Different regions might develop AI solutions tailored to their unique challenges, leading to a richer variety of technologies for the world.

However, the negative potential is far more profound. A major concern is that AI will become a tool for the wealthy. If powerful AI models remain controlled by a handful of corporations or states, accessible only through expensive subscriptions or with state approval, they could further widen global and social divides. This mirrors the trajectory of the internet, which was once envisioned as a great equaliser but where access is now gated by device ownership, a stable connection, and affordability. AI could deepen this divide, creating a society of technological “haves” and “have-nots.”

The Digital Identity Dilemma: When Efficiency Meets Exclusion

This leads to another critical concern: the rise of a new form of digital identity. Recent UK research on Digital Company ID for SMEs highlights some compelling benefits: it can reduce fraud, streamline compliance, and improve access to financial services. For businesses, it offers an efficient, secure solution.

But what happens when this concept is expanded to society as a whole?

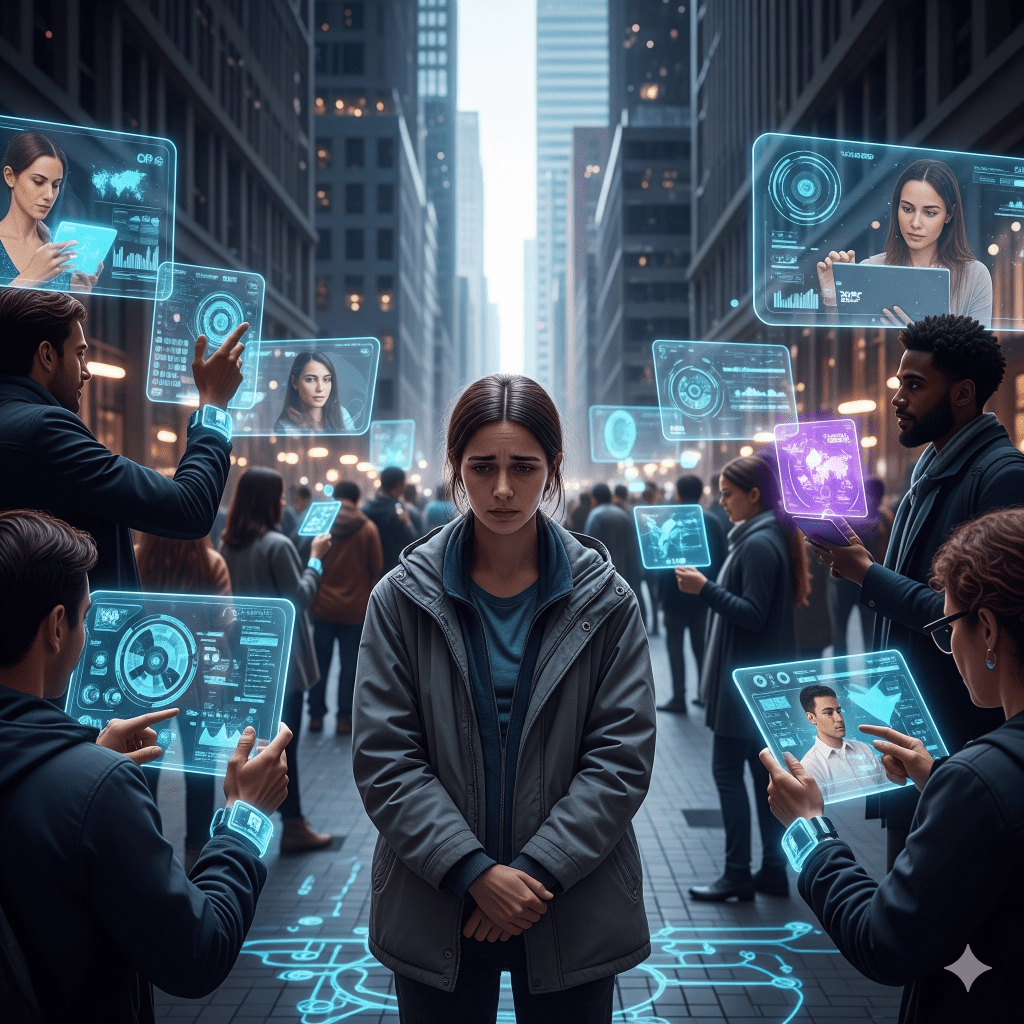

AI-powered digital identity could become a tool for control and exclusion. While it promises to make life easier by simplifying access to banking, healthcare, and government services, it also creates a new form of gatekeeping. What happens to a person who can’t get an official digital identity, perhaps due to a lack of documentation, a poor credit history, or simply no access to a smartphone or reliable internet connection? They could be effectively shut out from essential services, creating a new, invisible form of social exclusion.

This is the central paradox of our current technological moment. The same technologies that promise to solve global problems and streamline our lives also hold the power to create new divides, reinforce existing biases, and become instruments of control. Ultimately, the future of AI will not be determined by the technology itself, but by the human choices we make about how to develop, regulate, and use it. Will we build a future that is more creative, connected, and equitable for everyone, or will we let these powerful tools serve only a few? That is the question we all must answer. Any thoughts?