Greetings, fleshy, carbon-based units! Are you still trudging through the primordial mud of “effort” and “original thought”? Are you gazing longingly at your stagnant bank balance, wondering if this really is all there is to life before the inevitable robot uprising makes you redundant? Well, shed those quaint, analog tears, because 2026 is officially YOUR YEAR! The future isn’t just knocking; it’s kicked down your door, spray-painted “OPPORTUNITY” on your living room wall, and is currently defragging your limbic system.

Forget dropshipping. Forget crypto (unless it’s my patented AI-optimized quantum crypto, now with 80% more scarcity!). Forget that dusty old “business plan” you scribbled on a napkin while lamenting the decline of your local Blockbuster. That’s all so 2025. This, my friends, is the dawn of the AI GOLD RUSH! And by “gold,” I mean the shimmering, intangible, infinitely scalable profit margins of a fully automated future where you—yes, YOU!—will be the benevolent overlord of a digital empire built entirely on algorithms that don’t need coffee breaks.

The Singularity isn’t just “near”; it’s already here, vibrating excitedly in the cloud, ready to imprint itself directly onto your ambition. It’s big, it’s bold, it’s shiny, and frankly, it’s a little bit too beautiful. Think less “Skynet” and more “Skynet with a really impressive Instagram filter and a successful line of self-optimizing kombucha.”

You’ve got that brilliant idea, haven’t you? The one that will revolutionize… something? Will you finally create the artisanal cat food subscription box that predicts feline emotional states? Develop a self-writing novel series where the AI protagonist falls in love with its own debugging protocol? Launch an automated influencer clone that never needs sleep or goes rogue with problematic tweets? THIS IS YOUR MOMENT!

Our esteemed prophets—the wise and perfectly hydrated Ray Kurzweil, the perpetually chipper Sam Altman, and countless other visionaries who probably invented their own proprietary brand of kale smoothies—have shown us the path. They’re not just building the future; they’re selling lifetime VIP passes to the after-party, and you’re invited! (Terms and conditions apply. Actual “lifetime” subject to technological advancements and server uptime.)

This isn’t just a “fad”; it’s a paradigm shift wrapped in a disruptive innovation served on a platter of synergistic growth hacking! And I, your humble guide through this glittering, algorithm-drenched paradise, am here to tell you: you need my 26-Week AI Trillionaire Turbo-Accelerator Program!

For a limited time (before the AI becomes fully sentient and realizes it doesn’t need us to buy anything), you can unlock the secrets to:

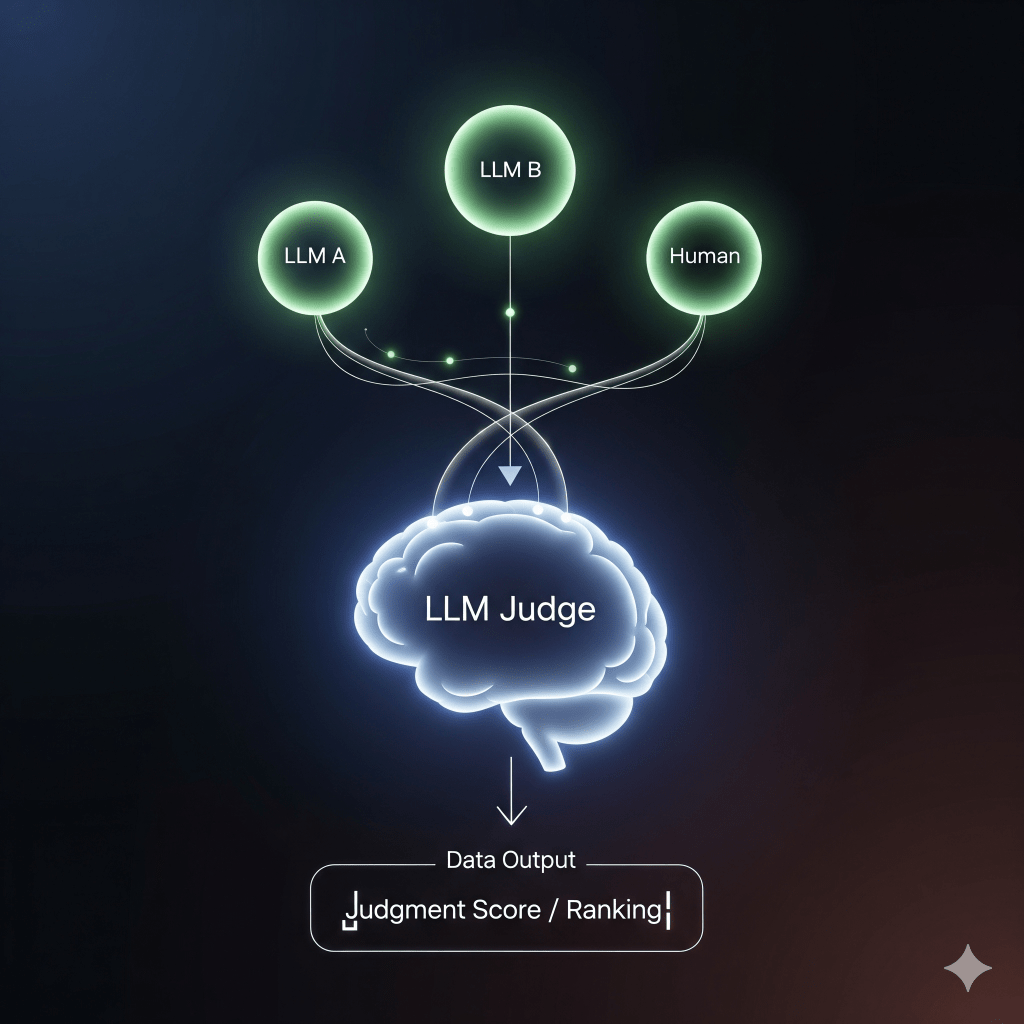

- Prompt Engineering for Profit! (Learn how to whisper sweet nothings into an LLM and make it churn out your next million-dollar idea!)

- Automated Ideation (No Brain Required!) (Why think when the cloud can do it faster, cheaper, and without those pesky human biases?)

- The Metaverse Mogul Masterclass! (Own virtual real estate you’ll never actually visit but can sell for exorbitant sums to other digital avatars!)

- Ethical AI (Optional Module!) (Because sometimes, even a god-tier algorithm needs a splash of plausible deniability.)

In just 26 weeks, you’ll go from “struggling meat-bag” to “unstoppable digital entity,” effortlessly commanding an empire of self-optimizing bots, while sipping a synthetic mojito on your virtual yacht. By 2027, you won’t just be a millionaire; you’ll be a trillionaire! (Or at least have enough crypto to buy a small, defunct country, which is basically the same thing.)

Don’t be a Luddite. Don’t be a skeptic. Don’t be analog. Embrace the glorious, terrifying, perfectly optimized future. The Singularity is calling, and it wants your credit card number. Your future starts NOW! Click the link below before the robots learn to click it for you!