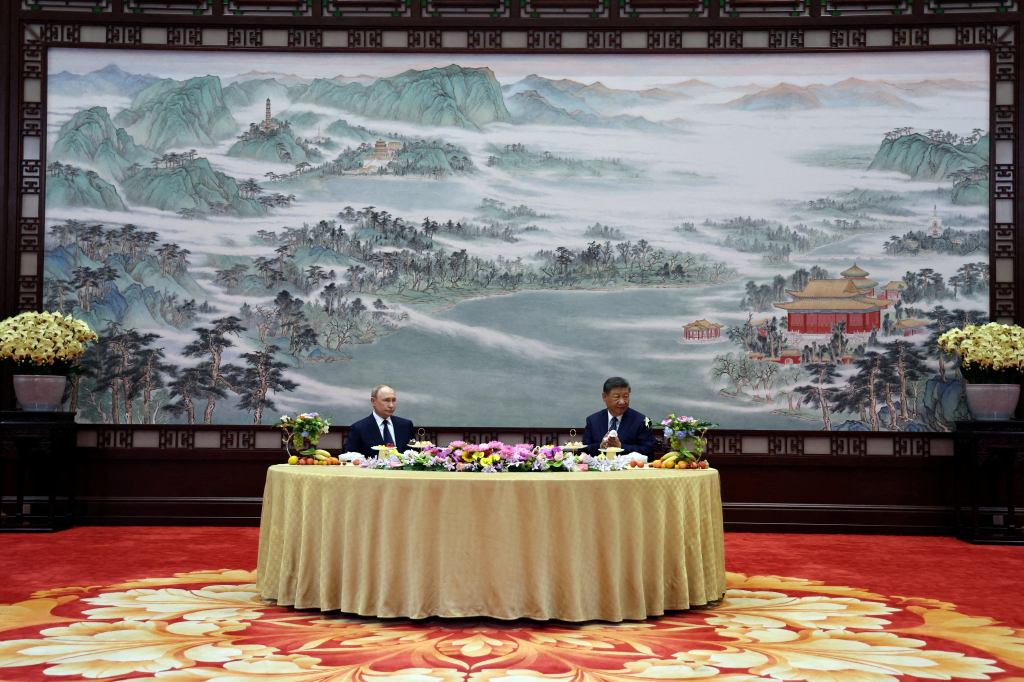

Good morning from a reality that feels increasingly like a discarded draft of a Philip K. Dick novel, where the geopolitical chessboard has been replaced by a particularly intense game of “diplomatic musical chairs.” And speaking of chairs, Vladimir Putin and Xi Jinping have just secured the prime seating at the Great Hall of the People in Beijing, proving once again that some friendships are forged not in mutual admiration, but in the shared pursuit of a slightly different global seating arrangement.

It’s September 2nd, 2025, a date which, according to the official timeline of “things that are definitely going to happen,” means the world is just one day away from commemorating the 80th anniversary of the end of something we used to call World War II. China, ever the pragmatist, now refers to it as the “War of Resistance Against Japanese Aggression,” which has a certain no-nonsense ring to it, much like calling a catastrophic global climate event “a bit of unusual weather.”

Putin, apparently fresh from an Alaskan heart-to-heart with a certain other prominent leader (one can only imagine the ice-fishing anecdotes exchanged), described the ties with China as being at an “unprecedentedly high level.” Xi, in a move that felt less like diplomacy and more like a carefully choreographed social media endorsement, dubbed Putin an “old friend.” One can almost envision the “Best Friends Forever” bracelets being exchanged in a backroom, meticulously crafted from depleted uranium and microchips. Chinese state media, naturally, echoed this sentiment, probably while simultaneously deleting any historical references that might contradict the narrative.

So, what thrilling takeaways emerged from this summit of “unprecedented friendship”?

The Partnership of Paranoia (and Profit): Both leaders waxed lyrical about their “comprehensive partnership and strategic cooperation,” with Xi proudly declaring their relationship had “withstood the test of international changes.” Which, in plain English, means “we’ve survived several global tantrums, largely by ignoring them and building our own sandbox.” It’s an “example of strong ties between major countries,” which is precisely what one always says right before unveiling a new, slightly menacing, jointly developed space laser.

The Economic Exchange of Existential Dependence: Russia is generously offering more gas, while Beijing, in a reciprocal gesture of cosmic hospitality, is granting Russians visa-free travel for a year. Because what better way to foster friendship than by enabling easier transit for, presumably, resource acquisition and the occasional strategic tourist? Discussions around the “Power of Siberia-2” pipeline and expanding oil links continue, though China remains coy on committing to new long-term gas deals. One suspects they’re merely waiting to see if Russia’s vast natural gas reserves can be delivered via quantum entanglement, thus cutting out the messy middleman of, well, reality. Meanwhile, “practical cooperation” in infrastructure, energy, and technology quietly translates to “let’s build things that make us less reliant on anyone else, starting with a giant, self-sustaining AI-powered tea factory.”

Global Governance, Now with More Benevolent Overlords: The most intriguing takeaway, of course, is their shared commitment to building a “more just and reasonable global governance system.” This is widely interpreted as a polite, diplomatic euphemism for “a global order that is significantly less dominated by the U.S., and ideally, one where our respective pronouncements are automatically enshrined as cosmic law.” It’s like rewriting the rules of Monopoly mid-game, except the stakes are slightly higher than who gets Park Place.

And if that wasn’t enough to make your brain do a small, bewildered pirouette, apparently these talks were just the warm-up act for a military parade. And who’s joining this grand spectacle of synchronised might? None other than North Korean leader Kim Jong Un. Yes, the gang’s all here, ready to commemorate the end of a war by showcasing enough military hardware to start several new ones. It’s almost quaint, this continued human fascination with big, shiny, destructive things. One half expects them to conclude the parade with a giant, joint AI-powered robot performing a synchronised dance routine, set to a surprisingly jaunty tune about global stability.

So, as the world careens forward, seemingly managed by algorithms and historical revisionism, let us raise our lukewarm cups of instant coffee to the “unprecedented friendship” of those who would re-sculpt global governance. Because, as we all know, nothing says “just and reasonable” quite like a meeting of old friends, a pending gas deal, and a military parade featuring the next generation of absolutely necessary, totally peaceful, reality-altering weaponry.