Well, well, well. Look what the digital cat dragged in. It’s Wednesday, the sun’s doing its usual half-hearted attempt at shining, and I’ve just had a peek at the blog stats. (Oh, the horror! The unmitigated, pixelated horror!)

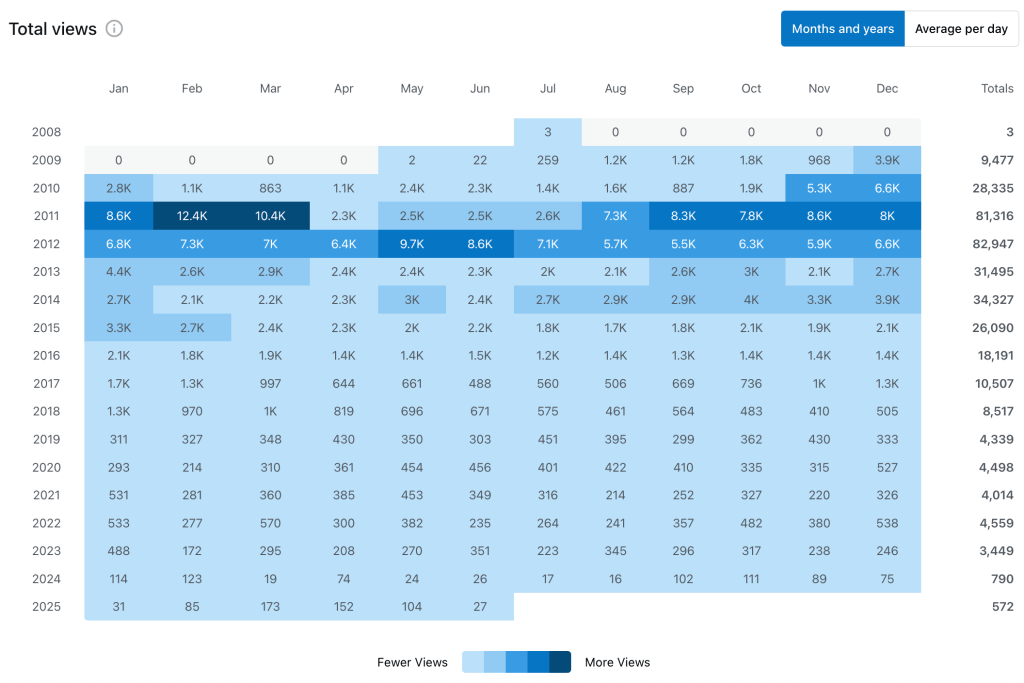

I’ve seen the graphic. It’s not a graphic, it’s a descent. A nose-dive. A digital plummet from the giddy heights of 82,947 views in 2012 (a vintage year for pixels, I recall) down, down, down to… well, let’s just say 2025 is starting to look less like a year and more like a gentle sigh. Good heavens. Is that what they call “trending downwards”? Or is it just the internet politely closing its eyes and pretending not to see us anymore? One might even say, our blog has started to… underpin its own existence, building new foundations straight into the digital subsoil.

And to add insult to injury, with a surname like Yule, one used to count on a reliable festive bump in traffic. Yule logs, Yuletide cheer – a dependable, seasonal lift as predictable as mince pies and questionable knitwear. But no more. The digital Santa seems to have forgotten our address, and the sleigh bells of seasonal SEO have gone eerily silent.

And so, here we stand, at the wake of the written blog. Pass the metaphorical tea and sympathy, won’t you? And perhaps a biscuit shaped like a broken RSS feed.

The Great Content Consumption Shuffle: Or, “Where Did Everyone Go?”

It wasn’t a sudden, cataclysmic asteroid impact, you see. More of a slow, insidious creep. Since those heady days of 2012, something shifted in the digital ether. Perhaps it was the collective attention span, slowly but surely shrinking like a woolly jumper in a hot wash. People, particularly in the West, seem to have moved from the noble act of reading to the more passive, almost meditative art of mindless staring at screens. They’ve traded thoughtful prose for the endless, hypnotic scroll through what can only be described as “garbage content.” The daily “doom scroll” became the new literary pursuit, replacing the satisfying turning of a digital page with the flick of a thumb over fleeting, insubstantial visual noise.

First, they went to the shiny, flashing lights of Social Media. “Look!” they cried, pointing at short-form videos of dancing grandmas and cats playing the ukulele, “Instant gratification! No more reading whole paragraphs! Hurrah for brevity!” And our meticulously crafted prose, our deeply researched insights, our very carefully chosen synonyms, they just… sat there. Like a beautifully prepared meal served to an empty room, while everyone else munches on fluorescent-coloured crisps down the street.

Then came the Video Content Tsunami. Suddenly, everyone needed to see things. Not just read about them. “Why describe a perfect coffee brewing technique,” they reasoned, “when you can watch a slightly-too-earnest influencer pour hot water over artisanal beans for three and a half minutes?” Blogs, meanwhile, clung to their words like barnacles to a slowly sinking ship. A very witty, well-structured, impeccably proofread sinking ship, mind you.

Adding to the despair, a couple of years back, a shadowy figure, a digital highwayman perhaps, absconded with our precious .com address. A cybersquatter, they called themselves. And ever since, they’ve been sending monthly ransom notes, demanding sums ranging from a king’s ransom ($500!) down to a mere pittance ($100!), all to return what was rightfully ours. It’s truly a testament to the glorious, unpoliced wild west of the internet, where the mere act of owning a digital patch can become a criminal enterprise. One wonders if they have a tiny, digital pirate ship to go with their ill-gotten gains.

The competition, oh, the competition! It became a veritable digital marketplace of ideas, except everyone was shouting at once, holding up signs, and occasionally performing interpretive dance. Trying to stand out as a humble blog? It was like trying to attract attention in a stampede of luminous, confetti-throwing elephants. One simply got… trampled. Poignantly, politely trampled.

So yes, the arguments for the “death” are compelling. They wear black, speak in hushed tones, and occasionally glance sadly at their wristwatches, muttering about “blog-specific traffic decline.”

But Wait! Is That a Pulse? Or Just a Twitch?

Just when you’re ready to drape a tiny, digital shroud over the whole endeavour, a faint thump-thump is heard. It’s the sound of a High Percentage of Internet Users Still Reading Blogs. (Aha! Knew it! There’s always someone hiding behind the digital curtains, isn’t there?) Apparently, a “significant portion” still considers them “important for brand perception and marketing.” Bless their cotton socks, the traditionalists.

And then, the cavalry arrives, riding in on horses made of spreadsheets and budget lines: Marketers Still Heavily Invest in Blogs. A “large percentage” of them still use blogs as a “key part of their strategy,” even allocating “significant budget.” So, it seems, while the general populace may have wandered off to watch videos of people unboxing obscure Korean snacks, the Serious Business Folk still see the value. Perhaps blogs are less of a rock concert and more of a quiet, intellectual salon now. With better catering, presumably.

And why? Because blogs offer Unique Value. They provide “in-depth content,” “expertise,” and a “space for focused discussion.” Ah, depth! A quaint concept in an age of 280 characters and dancing grandmas. Expertise! A rare and exotic bird in the land of the viral meme. Focused discussion! Imagine, people actually thinking about things. It’s almost… old-fashioned. Like a perfectly brewed cup of tea that hasn’t been auto-generated by an AI or served by a three-legged donkey.

The Blog: Not Dead, Just… Evolving. Like a Digital Butterfly?

So, the verdict? The blog format is not dead. Oh no, that would be far too dramatic for something so inherently verbose. It’s simply evolving. Like a particularly stubborn species of digital amoeba, it’s adapting. It’s learning new tricks. It’s perhaps wearing a disguise.

Success now requires “adapting to the changing landscape,” which sounds suspiciously like wearing a tin foil hat and learning how to communicate telepathically with your audience. It demands “focusing on quality content,” which, let’s be honest, should always have been the plan, regardless of whether anyone was watching. And “finding unique ways to engage with audiences,” which might involve interpretive dance if all else fails.

So, while the view count might have resembled a flatlining patient chart, the blog lives. It breathes. It probably just needs a nice cup of tea, a good sit-down, and perhaps a gentle reminder that some of us still appreciate the glorious, absurd, and occasionally profound journey of the written word.

Now, if you’ll excuse me, I hear a flock of digital geese honking about a new viral trend. Must investigate. Or perhaps not. I might just stay here, where the paragraphs are safe.