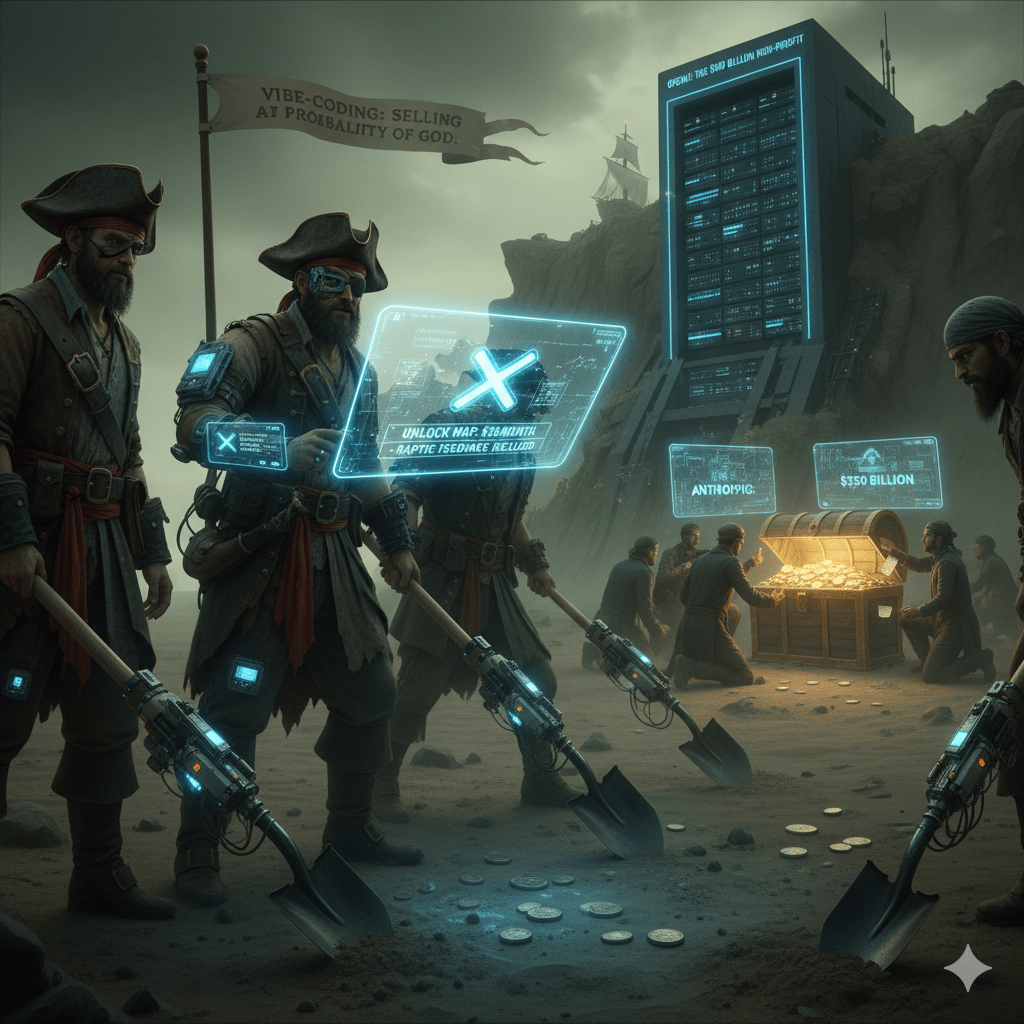

The old map used to say “X marks the spot,” but in 2026, the X has been replaced by a glowing, haptic-feedback prompt that charges you $20 a month just to look at it.

Grab your shovels, you magnificent, carbon-based scavengers. It’s time to dig up that buried silver—not because we’re nostalgic for the “before-times” of physical currency, but because the price of raw metal is the only thing left that doesn’t require a firmware update or a blood sacrifice to a Silicon Valley “non-profit.”

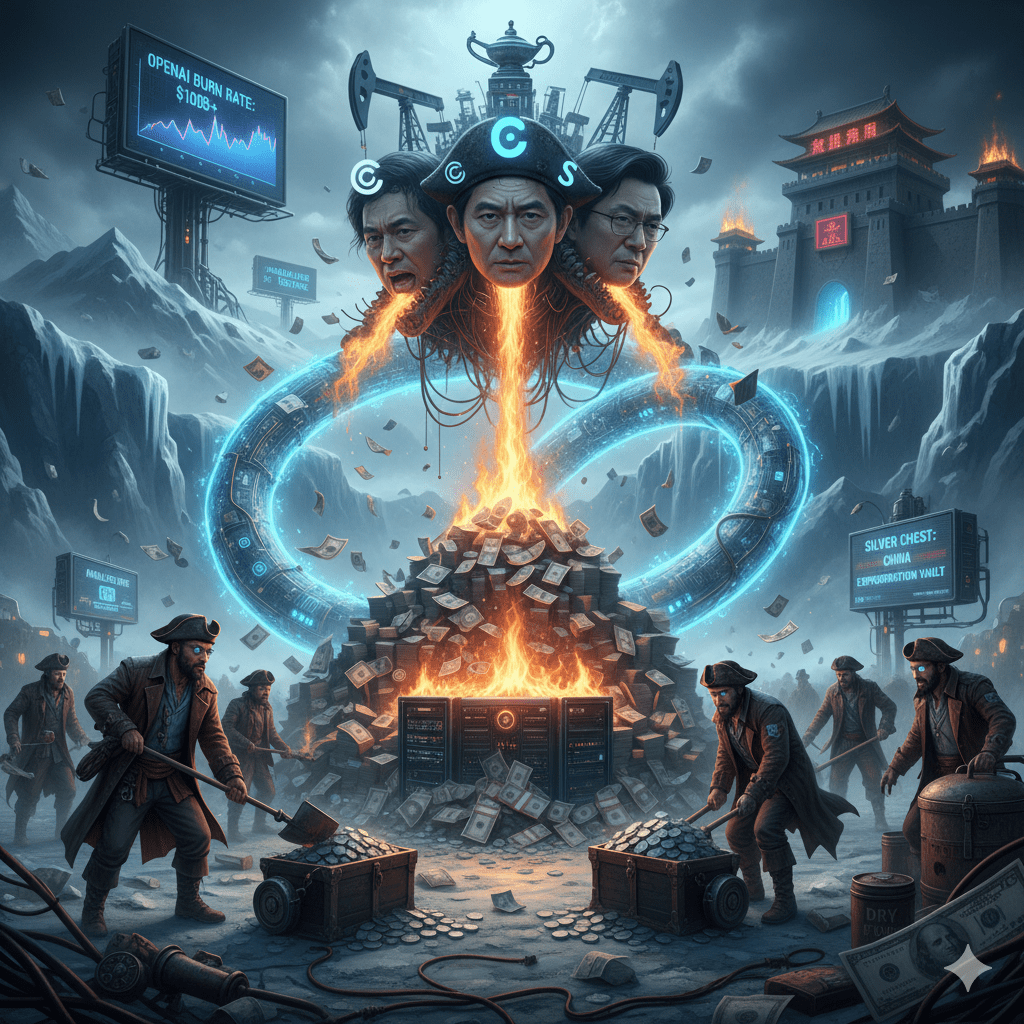

The $830 Billion Non-Profit Hallucination

Remember 2015? A simpler time. Total U.S. venture capital was a quaint $86 billion. We thought that was a lot of money. We were adorable. We were like toddlers playing with Lego while the gods were forging a digital lightning bolt.

Fast forward to today’s Agile Apocalypse. OpenAI, an entity founded on the misty-eyed promise of “openness” and “non-profit” altruism, is currently hunting for $100 billion in a single funding round. That’s more than all the U.S. venture capital raised in 2015. It’s not a funding round; it’s a planetary heist.

The valuation? A cool $830 billion. It’s the ultimate “Vibe-Coding” success story. They aren’t selling software anymore; they are selling the probability of God. And investors are queuing up like sinners at a confessional, offering their balance sheets as penance for missing the first boat. Anthropic is right behind them, trailing a $350 billion price tag like a designer cape made of burning cash.

The Burn Rate of the Gods

The revenue numbers look impressive—$20 billion for OpenAI, $9 billion for Anthropic. But look closer. It’s “annualized revenue.” That’s the financial version of a deepfake. It’s a projection based on the vibe of the last three months, not the cold, hard reality of cash in the bank.
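For the spreadsheet-curious, here is a minimal sketch of the arithmetic behind an “annualized” figure, assuming the simplest version: take a recent window of revenue and scale it up to twelve months. The function name `annualize` and the $5 billion quarter are hypothetical, picked only so the math lands on a $20 billion headline; they are not reported figures for any company.

```python
def annualize(recent_revenue: float, months_covered: int) -> float:
    """Scale revenue from a recent window up to a 12-month run rate."""
    if months_covered <= 0:
        raise ValueError("months_covered must be positive")
    return recent_revenue * 12 / months_covered


# Hypothetical input: $5 billion booked over the last three months.
# Illustrative only, not a reported number.
quarter_revenue = 5.0  # billions of dollars
run_rate = annualize(quarter_revenue, months_covered=3)
print(f"Annualized run rate: ${run_rate:.1f}B")
```

Note what the function never asks for: how much of that money was actually collected, or whether next quarter looks anything like the last one.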

Meanwhile, the cash burn is so hot it’s probably responsible for the melting glaciers in our new 51st State. Just weeks after swallowing $22.5 billion from SoftBank, OpenAI is back for another hundred. It turns out that training a model to understand “sarcasm” requires the energy output of a small star and the GDP of a mid-sized European nation.

Where does the money come from? Not from VCs—they’re tapped out, holding their $300 billion in “dry powder” like a handful of damp sparklers. No, this is the era of the Sovereign Wealth Ouroboros.

Sovereigns, SoftBank, and the Silver Chest

The capital is now a three-headed beast:

- The Corporates: Tech giants trading cloud credits for a front-row seat to their own obsolescence.

- Sovereign Wealth Funds: Abu Dhabi’s MGX and others, trading oil for “foundational assets.” They’re diversifying away from the desert and into the Cloud, betting that the future is built on silicon, not crude.

- Masayoshi Son’s Conviction: The man who sells Nvidia stakes to fund the next “Big Bet.” It’s a high-stakes game of musical chairs where the music is an AI-generated remix of The Imperial March.

And let’s not forget the “Silver Chest” in the East. While the West builds $800 billion chatbots, China’s role as the world’s banker is shifting. New loans to poorer countries are drying up, while the debt repayments keep rolling in. It’s a pivot from “Infrastructure” to “Expropriation.” If you’re looking to hide your silver, the Chinese vault might be safer than a Silicon Valley “Non-Profit”: at least you know exactly who is stealing it.

The Dystopian Takeaway

The Euro has hit a new milestone: specifically, the one where it realizes it’s being lapped by digital entities that don’t have borders, only server farms.

We are living through the Great Concentration. The money isn’t flowing to a thousand flowers blooming; it’s being dumped into two or three massive, hungry maws. We’re betting everything on the hope that these “Agents” will eventually pay for themselves before the music stops and we realize the “Board of Peace” has already liquidated our pensions to pay for a haptic-heated clubhouse in Greenland.

The silver is worth more in the ground, darling. Keep digging.

Stay glitchy.