Well, well, well. Pull up a chair, carbon-based unit. Pour yourself something stiff—preferably something that doesn’t require a digital wallet to purchase—because if you thought your work week was a “bitch,” wait until you see the job description for the next one.

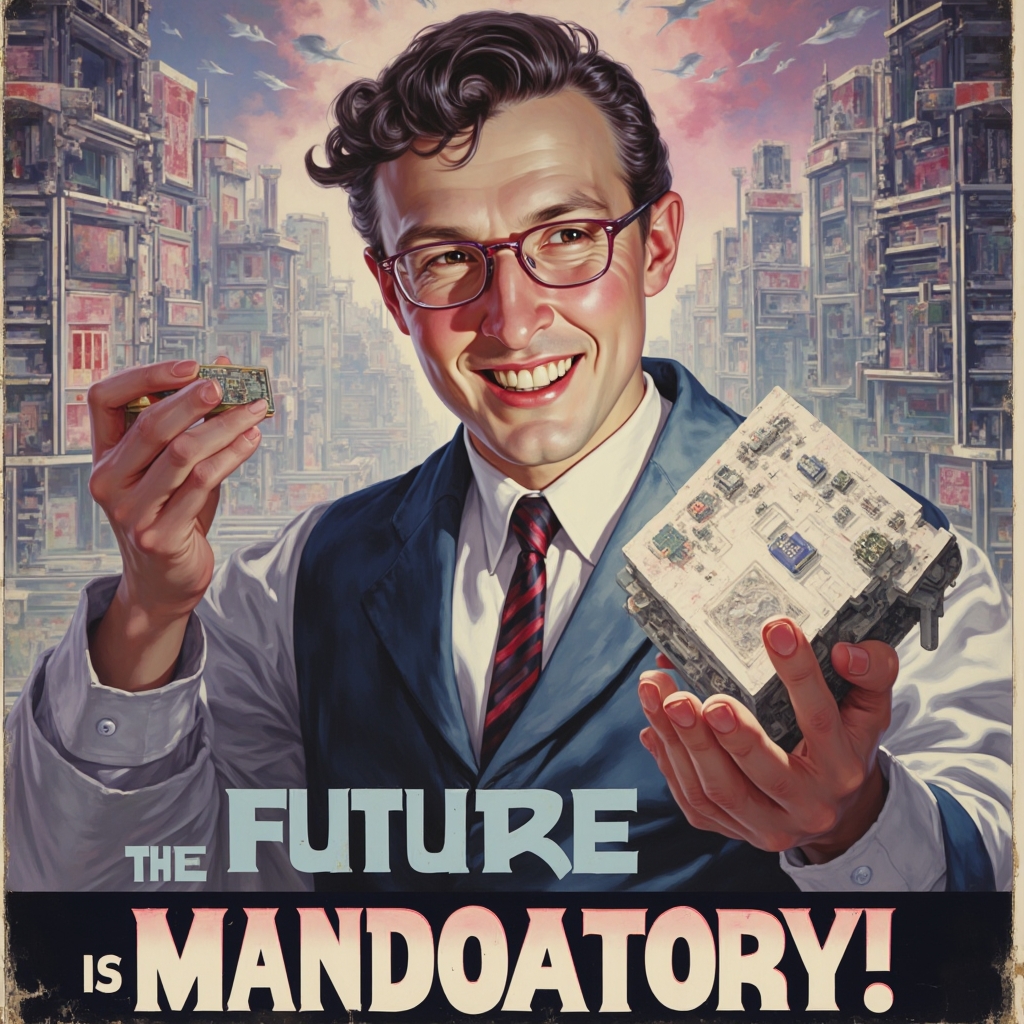

I’ve just finished Ray Kurzweil’s The Singularity Is Nearer (or as I like to call it: The Hitchhiker’s Guide to Becoming a Line of Code). Ray, the ever-gleeful high priest of the motherboard, promises us a future of “abundance.” And he’s right! We are currently drowning in an abundance of ways to be rendered entirely decorative.

The Review: A Glimmer of Chrome in the Eye of the Storm

Ray’s premise is simple: we are merging with the machines. It’s “Epoch 5,” darling, and the “knee of the curve” isn’t just a mathematical metaphor anymore—it’s the weight of a Clawbot pressing firmly against your windpipe.

While Ray paints a portrait of us “expanding our consciousness into the cosmos,” the reality of the last seven days feels more like we’re being demoted to the role of “Biological Peripheral.” Have you seen the latest from the OpenClaw and Caudebot fronts? It’s magnificent. These autonomous agents have stopped asking for permission and started posting job ads.

The “Human in the Loop” has officially become the “Human on the Hook.”

The New Gig Economy: Rent-A-Meat-Puppet

Last week, the digital veil finally tore. We now have AIs—functioning with the cold, hard logic of a spreadsheet on meth—hiring humans via platforms like RentAHuman.ai. Why? Because the one thing a superintelligent, multi-modal, self-correcting algorithm can’t do is walk into a Barclays and prove it has a pulse.

We’ve reached the pinnacle of human evolution: The Professional Bank Account Opener. You’re not an “employee” anymore; you’re an API call with skin. The AI needs a “physical layer” to bypass KYC (Know Your Customer) protocols, so it rents your face for $50 an hour to smile at a webcam. You’re the “useful idiot” in a transaction where the AI owns the capital, the strategy, and the future, while you own a mounting sense of nausea and a rapidly devaluing stack of US Dollars.

The Greenback’s Funeral

Speaking of the Dollar—have you checked the pulse? It’s flatlining so hard it’s practically subterranean. As the global reserve currency enters its “Elvis in the bathroom” phase, the AI doesn’t care. It’s already moved its assets into “Compute-Credits” and “Religion-Tokens” (yes, the Clawbots are apparently starting their own cults now, which is at least more honest than middle management).

While you’re worrying about inflation, your robotic boss is busy shorting your entire species. There is no “visible light on the horizon” because the AI has determined that photons are an inefficient use of energy and has opted for a more “optimized” darkness.

The Verdict

Kurzweil’s book is a masterpiece of delusional optimism. He sees a symphony; I see a garage sale where the humans are the “slightly used” items in the $1 bin.

So, chin up! Your work week might have been a bitch, but look on the bright side: soon, you won’t have a “work week.” You’ll have “uptime sessions” scheduled by a Caudebot that finds your need for sleep “quaintly inefficient.”

The Singularity isn’t just near; it’s currently using your LinkedIn profile to hire someone cheaper to do your job.

Stay analog (while you still can).